In November 2022, OpenAI introduced the world to ChatGPT (referred to here as ChatGPT-3), an artificial intelligence (AI)-backed chatbot built on the company's GPT-3.5 series of models. It is based on the GPT (Generative Pre-trained Transformer) architecture, a type of transformer neural network. The model is trained on a large dataset of text, including books, articles, and websites, and learns to predict the next word in a sentence based on the context of the words that came before it. When given a prompt, the model generates text one word at a time. At each step it samples from a probability distribution over possible next words, which it computes from the context using what it learned during training. The generated text is not a transcription of any specific text. Rather, it is a new text that draws on the patterns the model learned from its training data.

There is little doubt that ChatGPT-3 and similar AI language-model chatbots are game-changers for a number of industries, including higher education. ChatGPT-3 is a state-of-the-art model in the field of natural language processing and has received significant attention in the tech community. It is a powerful tool for generating human-like text and is already being used in many industries, such as customer service, content creation, counseling, and research. Some observers have described it as the most powerful and technologically disruptive language model tool to date.

ChatGPT-3 draws on a vast vocabulary and body of data, and it does a remarkable job of interpreting words in context. This helps ChatGPT-3 mimic speech patterns while conveying a seemingly encyclopedic knowledge. ChatGPT-3 can explain particle physics, write complex computer code, compose poems, or tell a joke on command. Millions of people have used ChatGPT-3 since its November release. And while ChatGPT-3 is the first chatbot of its kind to reach such a wide public audience, other tech companies like Google and Meta are developing their own AI language model tools. Needless to say, colleges and universities are scrambling to adapt to a world in which students can, with a few keystrokes, prompt an AI application to write a college essay for them.

Of course, academic dishonesty is nothing new. Student cheating has been a challenge for educators since the beginning of formalized education, and cheating in US academic settings has a long history, dating back to the nine colonial colleges chartered before the American Revolution. In the 20th century, cheating became more prevalent as students felt increasing pressure to succeed in a competitive academic environment. These tendencies were only compounded by the rise of standardized testing. In the 21st century, the internet and easy access to information have further enabled student cheating. These temptations were exacerbated when students pivoted to remote learning during the COVID-19 pandemic.

Efforts to address academic cheating have included plagiarism detection software and honor codes. Universities have also invested significant resources in writing centers and library infrastructure aimed at teaching students information literacy and writing skills. Despite these efforts, cheating remains a persistent problem in academic institutions in the United States. Many educators are concerned that AI chat technology will make it more difficult, if not impossible, for professors to catch plagiarism, and they have reason for concern. ChatGPT-3 can generate text that is difficult to distinguish from text written by humans, and, as of this writing, not even sophisticated detection software can consistently recognize AI-generated text. In response to these concerns, several of the largest public school districts in the US have banned ChatGPT-3 on their networks and devices. Likewise, many post-secondary educators are calling for institutions of higher education to ban it.

Small nonprofit baccalaureate and master’s colleges and universities may be better equipped to navigate this technological frontier than larger institutions, especially those that approach education as a transaction or those that have developed competency-based models allowing students to complete courses with minimal interaction with their instructors. A transactional model of higher education views students merely as customers who pay for a service (education) provided by the institution. In this model, the focus is on providing students with a credential that will, theoretically, help them secure employment rather than on broader intellectual development or the pursuit of knowledge for its own sake. The institution is responsible for delivering the educational product, and the student is responsible for paying for the service and meeting certain academic standards. For the sake of cost savings and convenience, many institutions of higher education have developed models of instruction that place the burden of learning entirely on the learner, limiting professors to facilitating the delivery of course materials. What happens to this model, though, when the proof is no longer in the proverbial pudding? What kind of education has taken place when students submit AI-generated deliverables that mask the fact that they have no understanding of, or exposure to, the course material?

In a TED Talk entitled “The Agony of Trying to Unsubscribe,” comedian James Veitch describes his efforts to unsubscribe from an automated marketing email. When hitting the “unsubscribe” button fails to stem the steady tide of emails from a certain solicitor, Veitch sets up an auto-replier designed to ping back a response to the unwanted emails. The solicitor’s auto-response to his auto-reply, of course, then sets in motion a series of auto-generated emails pinging each other in perpetuity. “It gives me immense satisfaction,” Veitch reflects, “to know that these computer programs are going to be pinging each other for eternity.” One might see in this comedic example a disturbing vision for the future of education: an endless interchange between scripted course content and AI-generated responses, both devoid of human investment and both working to devalue any credential that might be conferred upon students. The author of Ecclesiastes calls this “vanity”: the meaningless expenditure of energy.

The higher education landscape is increasingly crowded with institutions that are selling degrees rather than education, trading assignments for grades and papers for diplomas. These institutions rely on grading software, teaching assistants, and remote instructors to credential students. Such institutions have reason to be concerned about ChatGPT-3 and similar AI technologies. Society, as a whole, may have a greater concern. What will be the effect if institutions of higher learning credential hundreds of thousands of students who are severely lacking in competency?

Many institutions of higher education are currently in a panic because ChatGPT-3 is undermining the transactional model of education. Credentials from such institutions will, necessarily, become increasingly meaningless as they lose their ability to evidence learning. The future of higher education is not in automation or AI. These tools serve only to make clearer the differences between transactional and formational models of education.

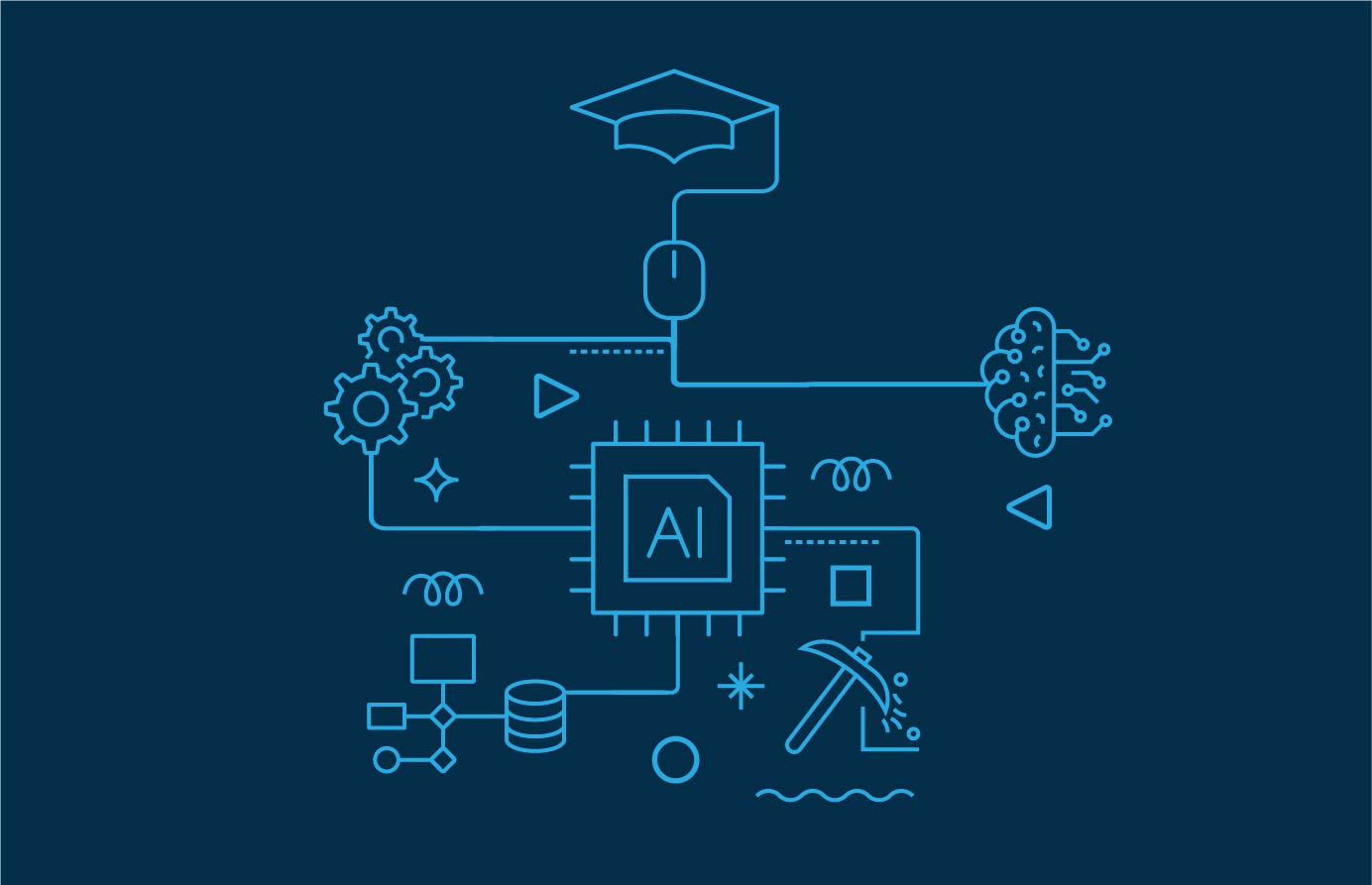

Formational education focuses on the development of the whole person, including the spiritual and moral aspects of an individual. It aims to shape students into responsible, mature, and well-rounded individuals who can contribute positively to society. One can see this formational commitment in Cairn University’s mission to “educate students to serve Christ in the church, society, and the world as biblically minded, well-educated, and professionally competent men and women of character.” This approach to education emphasizes personal growth and character development, often through experiences and student-faculty interactions that challenge students to think critically, reflect on their beliefs, and build a sense of higher purpose. Formational education seeks to prepare individuals for a meaningful life, not just for careers or jobs. This approach to education is, by necessity, highly personal and relational. As such, schools that take this formational approach have far less reason to be concerned about ChatGPT-3. A professor who knows the author of an assignment as a person—whose mind is developing as part of an educational relationship—is not likely to be tricked by the superficial (and impersonal) essays that are manufactured by AI.

Still, it is important to recognize that even in schools where student formation is a valuable element of the education process, the new AI tools present challenges. Faculty and staff will need to brainstorm and initiate changes to the student evaluation process. In-class learning and testing will necessarily become more important. Perhaps there will be more review and testing of works prepared outside of classrooms. What is clear is that educational integrity calls for a recommitment to the task of imparting knowledge to students. Students, likewise, will need to understand this new reality while recommitting to the importance of genuine education. They must acknowledge upfront that, however important a diploma may be to their future, their growth in knowledge, skills, and preparation is even more important.

Students need to direct their own learning, but they also need the guidance of invested and knowledgeable faculty. For institutions staffed by faculty who know and invest in their students, AI chatbots are not a threat. Rather, they present a new opportunity for faculty to encourage wisdom, discernment, critical thinking, and creativity in their students. Like anything created by human beings, technology is not morally neutral, and neither are the algorithms and data sets the chatbot relies on. The use of and interaction with AI necessitates wisdom, care, and a moral foundation on which to evaluate the AI’s output.

Furthermore, AI presents an opportunity for faculty to explore, with renewed vigor, what it means to be human. AI is not, nor should it ever be, a substitute for human wisdom, care, or relationship. The Word of God makes it very clear that humans are uniquely created in the image of God (Imago Dei) and are distinct from machines. God took special care in the creation (formation) of man: God breathed into man the breath of life, and man became a living being (Genesis 2:7). Only humans, male and female, are made in God’s image as spiritual, self-conscious, and self-determined beings capable of reason, emotion, ethical decision-making, and aesthetic judgment. Faculty committed to genuine education will teach with this dynamic understanding of the nature of the Imago Dei. This biblically informed ontological understanding of human learning has little to fear from an AI tool that, at best, can only mimic human language.

New tools have, throughout history, made human tasks easier to accomplish. This has often been for the good of people, opening the door to better products while freeing workers to focus on other tasks. AI is a new tool. We must see to it that AI is used to enhance learning at our institutions rather than allowed to undermine educational integrity. Educators and students must be committed to working toward that end, clear about its necessity and confident in its possibility.